Gestures for Accessible Digital Musical Instruments

When playing musical instruments, deaf and hard-of-hearing (DHH) people typically sense their music from the vibrations transmitted by the instruments or the movements of their bodies while performing. Sensory substitution devices now exist that convert sounds into light and vibrations to support DHH people’s musical activities. However, these devices require specialized hardware, and the marketing profiles assume that standard musical instruments are available. Hence, a significant gap remains between DHH people and their musical performance enjoyment. To address this issue, this study identifies end users’ preferred gestures when using smartphones to emulate the musical experience based on the instrument selected. This gesture elicitation study applies 10 instrument types. Herein, we present the results and a new taxonomy of musical instrument gestures. The findings will support the design of gesture-based instrument interfaces to enable DHH people to more directly enjoy their musical performances.

Members: Ryo Iijima, Akihisa Shitara, Yoichi Ochiai

Smartphone Drum

Smartphone applications that allow users to enjoy playing musical instruments have emerged, opening up numerous related opportunities. However, it is difficult for deaf and hard of hearing (DHH) people to use these apps because of limited access to auditory information. When using real instruments, DHH people typically feel the music from the vibrations transmitted by the instruments or the movements of the body, which is not possible when playing with these apps. We introduce “smartphone drum,” a smartphone application that presents a drum-like vibrotactile sensation when the user makes a drumming motion in the air with their smartphone like a drumstick. We implemented an early prototype and received feedback from six DHH participants. We discuss the technical implementation and the future of new instruments of vibration.

Members: Ryo Iijima, Akihisa Shitara, Sayan Sarcar, Yoichi Ochiai

Word Cloud for Meeting

Deaf and hard of hearing (DHH) people have limited access to auditory input, so they mainly receive visual information during online meetings. In recent years, the usability of a system that visualizes the ongoing topic in a conference has been confirmed, but it has not been verified in a remote conference that includes DHH people. One possible reason is that visual dispersion occurs when there are multiple sources of visual information. In this study, we introduce “Word Cloud for Meeting,” a system that generates a separate word cloud for each participant and displays it in the background of each participant’s video to visualize who is saying what. We conducted an experiment with seven DHH participants and obtained positive qualitative feedback on the ease of recognizing topic changes. However, when the topic changed in a sequence, it was found to be distracting. Additionally, we discuss the design implications for visualizing topics for DHH people in online meetings.

Members: Ryo Iijima, Akihisa Shitara, Sayan Sarcar, Yoichi Ochiai

See-Through Captions

Real-time captioning is a useful technique for deaf and hard-of-hearing (DHH) people to talk to hearing people. With the improvement in device performance and the accuracy of automatic speech recognition (ASR), real-time captioning is becoming an important tool for helping DHH people in their daily lives. To realize higher-quality communication and overcome the limitations of mobile and augmented-reality devices, real-time captioning that can be used comfortably while maintaining nonverbal communication and preventing incorrect recognition is required. Therefore, we propose a real-time captioning system that uses a transparent display. In this system, the captions are presented on both sides of the display to address the problem of incorrect ASR, and the highly transparent display makes it possible to see both the body language and the captions.

Members: Akihisa Shitara, Kenta Yamamoto, Ippei Suzuki, Ryosuke Hyakuta, Ryo Iijima, Yoichi Ochiai

Transparent display provided by Japan Display Inc.

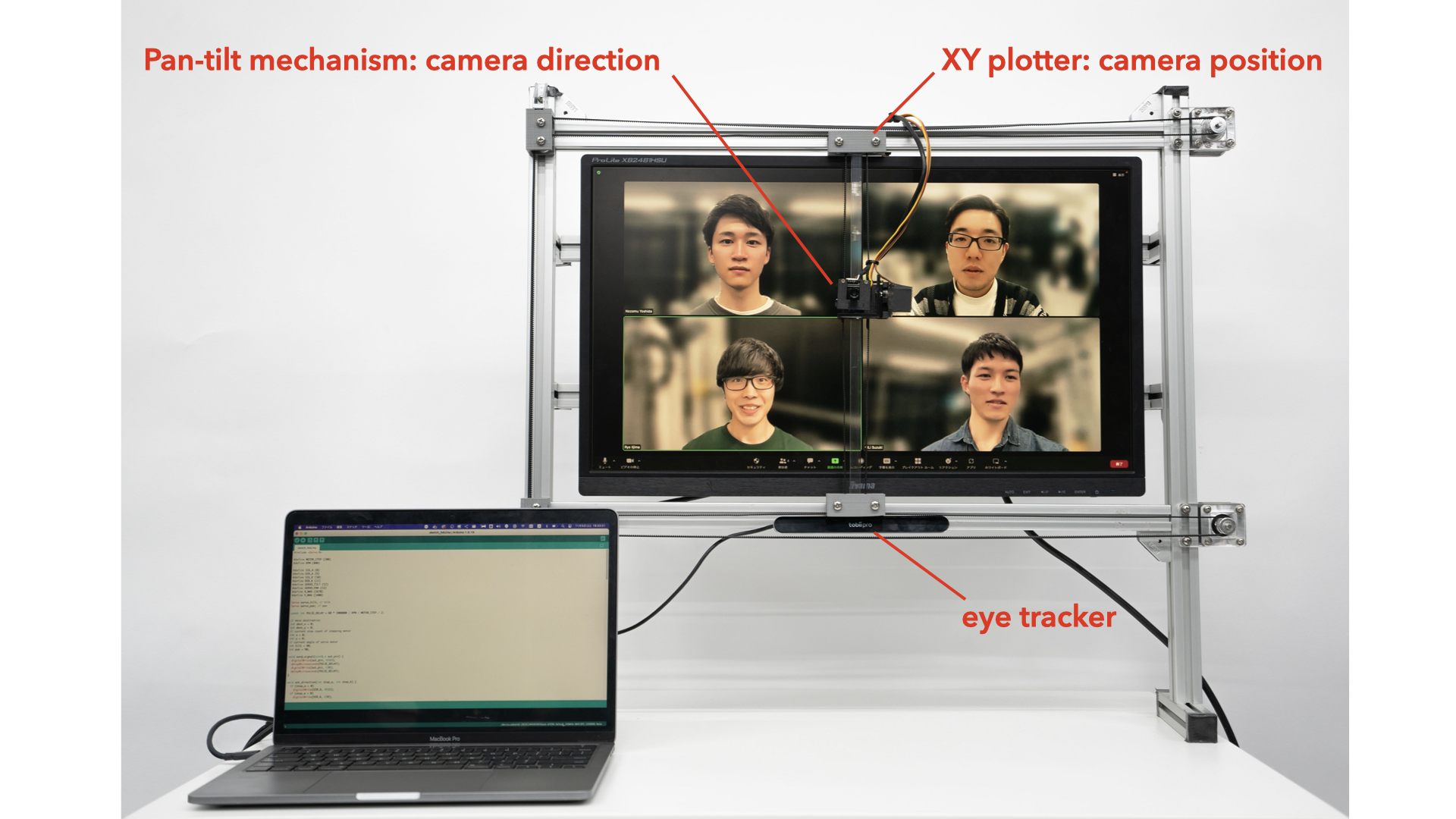

Eye Contact Framework toward Improving Gaze Awareness in Video Conferences

Gaze information plays an important role as non-verbal information in face-to-face conversations. However, in online videoconferences, users’ gaze is perceived as misaligned due to the different positions of the screen and the camera. This problem causes a lack of gaze information, such as gaze awareness. To solve this problem, gaze correction methods in videoconference have been extensively discussed, and these methods allow us to maintain eye contact with other participants even in videoconference. However, people rarely make constant eye contact with the other person in face-to-face conversations. Although a person’s gaze generally reflects their intentions, if the system unconditionally corrects gaze, the intention of the user’s gaze is incorrectly conveyed. Therefore, we conducted a preliminary study to develop an eye contact framework; a system that corrects the user’s gaze only when the system detects that the user is looking at the face of the videoconferencing participant. In this study, participants used this system in a online conference and evaluated it qualitatively. As a result, this prototype was not significant in the evaluation of gaze awareness, but useful feedback was obtained from the questionnaire. We will improve this prototype and aim to develop a framework to facilitate non-verbal communication in online videoconferences.

Members: Kazuya Izumi, Shieru Suzuki, Ryogo Niwa, Atsushi Shinoda, Ryo Iijima, Ryosuke Hyakuta, Yoichi Ochiai

EmojiCam (2021)

This study proposes the design of a communication technique that uses graphical icons, including emojis, as an alternative to facial expressions in video calls. Using graphical icons instead of complex and hard-to-read video expressions simplifies and reduces the amount of information in a video conference. The aim was to facilitate communication by preventing quick and incorrect emotional delivery. In this study, we developed EmojiCam, a system that encodes the emotions of the sender with facial expression recognition or user input and presents graphical icons of the encoded emotions to the receiver. User studies and existing emoji cultures were applied to examine the communication flow and discuss the possibility of using emoji in video calls. Finally, we discuss the new value that this model will bring and how it will change the style of video calling.

Members: Kosaku Namikawa, Ippei Suzuki, Ryo Iijima, Sayan Sarcar, Yoichi Ochiai

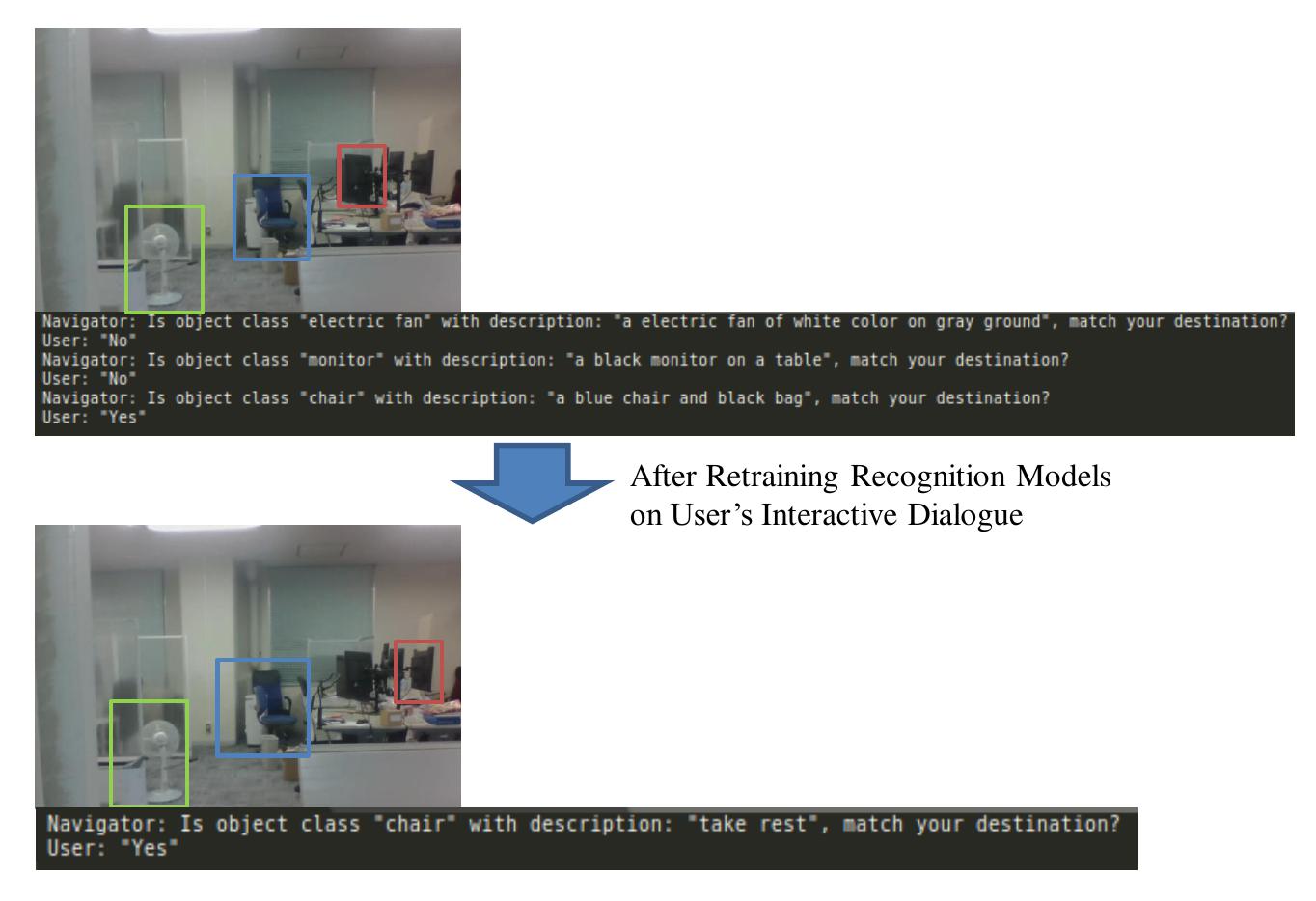

Personalized Navigation

Indoor navigation systems guide a user to his/her specified destination. However, current navigation systems face challenges when a user provides ambiguous descriptions about the destinations. This can commonly happen to visually impaired people or those who are unfamiliar with new environments. For example, in an office, a low-vision person asks the navigator by saying “Take me to where I can take a rest?”. The navigator may recognize each object (e.g., desk) in the office but may not recognize which location the user can take a rest. To overcome the gap of surrounding understanding between low-vision people and a navigator, we propose a personalized interactive navigation system that links user’s ambiguous descriptions to indoor objects. We build a navigation system that automatically detectS and describeS objects in the environment by neural-network models. Further, we personalize the navigation by re-training the recognition models based on previous interactive dialogues, which may contain the corresponding between user’s understanding and the visual images or shapes of objects. Additionally, we utilize a GPU cloud for supporting computational cost and smooth navigation by locating user’s position using Visual SLAM. We discussed further research on customizable navigation with multi-aspect perceptions of disabilities and the limitation of AI-assisted recognition.

Members: Jun-Li Lu, Hiroyuki Osone, Akihisa Shitara, Ryo Iijima, Bektur Ryskeldiev, Sayan Sarcar, Yoichi Ochiai